13 hours and 22 minutes without traffic, 816 miles: the drive from Sacramento to Port Angeles, Washington, is not a common weekend getaway for most Sacramentans.

Yet for the foreseeable future, it will be one for me!! My girlfriend joined the United States Coast Guard and is stationed on the Olympic Peninsula. Never would I have thought that I'd have to worry about OPSEC issues and this blog, yet here we are.

Last Friday I played hooky from work, with the story that I'd caught a stomach bug from Roomie's mom, a kindergarten teacher. The best lies are the ones that weave in bits of truth, and knowing my stammering ass, I needed to have my story down pat for the inevitable interrogation from HR. Of course, that interrogation would never take place, but I feel more secure when I think these things through. Thursday evening I made sure to make a scene about needing to run out to the car for some Pepto-Bismol for an upset stomach. I think I put on a pretty good act; my coworker offered me some Dramamine in case I was feeling nauseous.

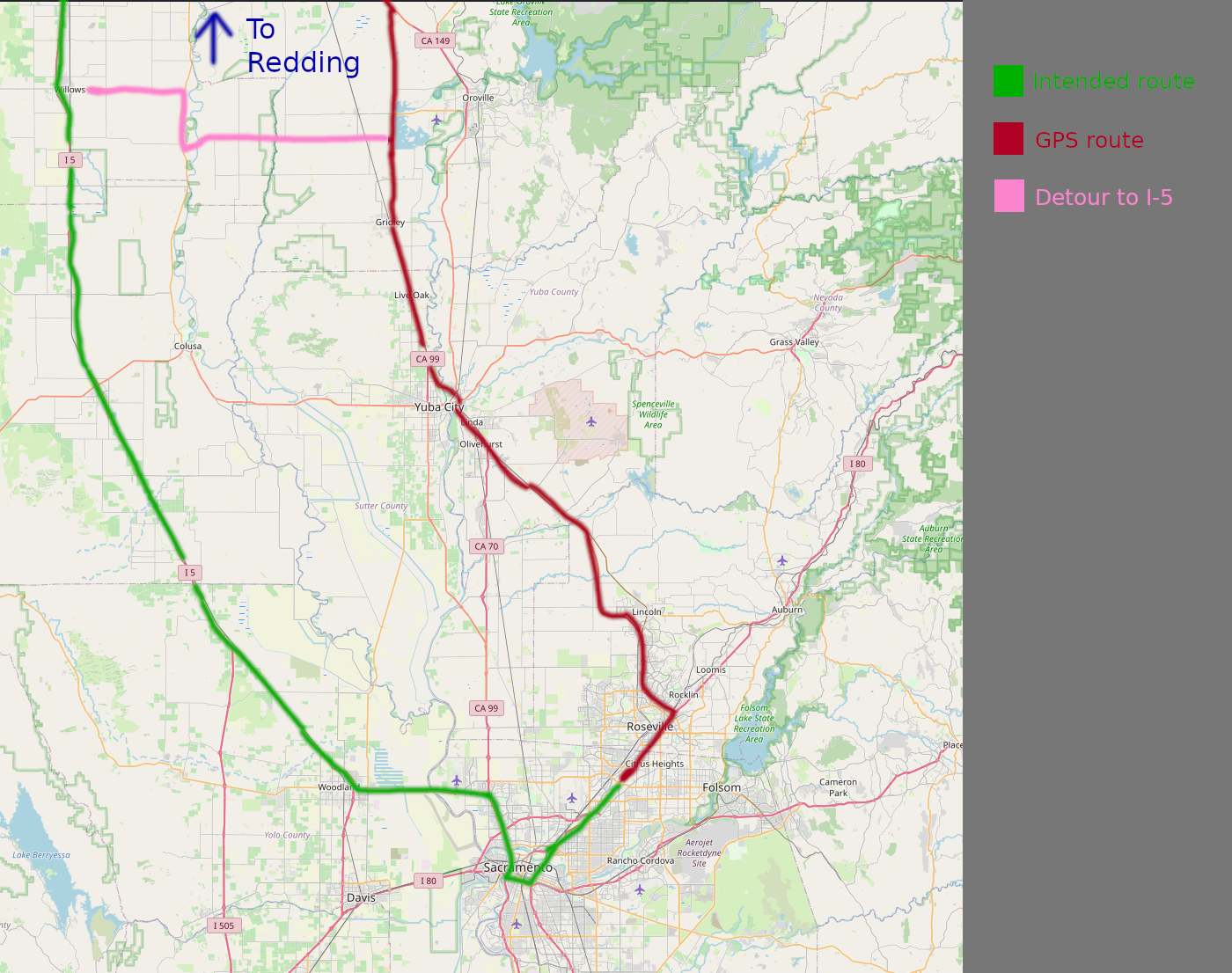

After my shift let out I got on the road right away; no sense in not getting a head start if I wasn't going to be sleeping for the next few hours anyway. I plugged in the closer of the two rest stops I was considering for the night, one about half an hour south of Redding. I assumed Google Maps would be smart enough to route me along the more direct of the two paths, but I was sorely mistaken. I expected it to have me loop back a bit on I-80 west, putting me on Highway 50 west for a few exits before I could get on I-5 north and have a straight shot.

But no!!

I understand why it chose this route; it's still fairly direct and requires zero backtracking. What the bots did not account for, however, was the horrible fog. I'm unsure whether I-5 was just as foggy over the same stretch, but I lean towards no. The visibility was so poor that for some sections I had to drive under 30 mph, well below the speed I needed to truly shave off time that night. Eventually Google offered a detour that added 15 minutes to the trip but put me on my preferred route, and boy am I glad I took it. As soon as I got on it the fog cleared, and before I knew it I was on I-5 with its 70 mph speed limits. I continued on for a little over an hour before the clock switched over to 01:00 and the first signs of tiredness started to make themselves known. Fortunately, the rest stop I was aiming for was only 15 more minutes down the road.

The Mazda 3 hatchback is not generally considered a good car for road-tripping, but I found it plenty comfortable for the night's stay. My camping air mattress always leaks over the course of a few hours and has a tendency to slip out from beneath me in the night, so I had gotten some foam tiles from Home Depot, the kind you see at gyms or elementary schools. I have to say, for $25 they were the best purchase of the trip. Although firm, they were comfortable to fall asleep on and insulating enough to keep me warm; I've had worse nights of sleep on real beds. I had the rear seats laid flat and my head facing the rear of the car, with my feet resting on the center console between the front seats. Not a lot of wiggle room, but it didn't require contorting myself, and I avoided the stiff neck I get whenever I sleep in the front seats.

Before bed, I drafted the text I would send my supervisor the next morning, telling him I wasn't able to keep anything down and didn't get a lick of sleep between trips to the bathroom to hurl. It's a busy time at work, so a little head cold wouldn't cut it, nor could I request this time off. Once I'd set my alarms and my head hit the pillow, I quickly slipped into sleep.

Friday morning I awoke, sent the text, called my folks to let them know I was OK, and hit the road. Nothing of note really happened, besides a pleasant interaction with a pump attendant just over the Oregon border. I made good time, having bought all the snacks and drinks I would need ahead of time. I made it onto US-101 (Hood Canal Highway) before sundown and reached her home around 20:00.

Her beautiful, smiling face was a sight for sore eyes. Even though it had only been a few weeks since I'd last seen her in person, it felt like ages. We had a wonderful weekend together; she led me through some of the most beautiful landscapes, showing me all the spots she had discovered during her brief time there so far. It was magical. While I have some commitments to wrap up in Sacramento, the gentle whisper of the call of the North has become a beckoning cry.

Saturday evening, we watched the sunset on the beach, the chilly wind biting at our extremities. The beach sits right along the Strait of Juan de Fuca, which separates us from our Canadian neighbors. As someone who has lived smack dab in the center (latitudinally speaking) of the U.S. my whole life, surrounded by hundreds of miles of Californian authority on all sides, I find that crossing into other states feels weird. Being able to look across the narrow strait at another sovereign land was truly peculiar. It made the hair on my neck stand up, not out of fear, just from how alien it felt. A bald eagle flew over our heads as a reminder of which side we were on, though the raptor held no allegiance to the border as she sailed aloft above the brine and stone.

My tear-filled departure Sunday night was a hard one. Every fiber of my body begged me to stay, and if it weren't for work, I would have. What I would give to be free of my ties to Sacramento and spend every night with her. Soon that day will come, but for now it will have to be made up for with long weekends together.

I made great time through Washington; by 23:00 I was in Oregon, and not having to deal with traffic through Portland was a godsend. Forty-five minutes later, it was time for a refuelling stop. As soon as I stepped out, the tiredness hit me like a freight train. Only 15 minutes down the interstate from the gas station, I was already looking longingly at the rest stop signs. I know the dangers of drowsy driving and didn't want to add to the statistics. I passed the first rest stop I saw, but ended up stopping 30 minutes shy of the one I was aiming for.

This night was colder, and I was all the more desperate to get to bed. I layered up and assumed my sleeping position, but the cold night air burned my sinuses and left me with a pounding headache. Once the residual heat from the car's HVAC wisped away, it left me in an icebox. What I didn't realise in the dark of the night was that the sleeping bag I was under was inside out; my desperate, half-awake attempts to zip it up were futile, and I lay there cold: not cold enough to jolt me awake, but not warm enough to sleep through either. At 04:30 I had had enough and forced myself awake so I could fix the sleeping bag and get at least a couple hours of good, restful sleep. I managed to turn it rightside-in and got it halfway zipped up. I slumped back into position, and before I knew it, the blare of my phone's alarm broke through the deep slumber. In hindsight, I wish I'd slept one more hour, but at the time I thought I had work in 10 hours with an 8.5-hour drive ahead of me; I had been told we were working on MLK Day. The boss who told me was mistaken. 🙄

I started the car and had the heat on high blast as I sipped at my energy drink, shaking away the tiredness of the less-than-restful previous night. Soon the frost that had settled on every surface of the car started to melt away and I was feeling awake enough to hold the car within the lines and let cruise control take care of the rest before the caffeine took hold.

I made it home by 14:30, averaging 26.5 mpg over the trip, a pleasant surprise and a number that could have been higher if I hadn't gone joyriding up the winding passes as fast as I did. I really enjoyed the trip and am eagerly planning my next one. Fortunately, this last semester is going to be entirely online, so I only have to contend with work in order to spend time up there.

Someday soon, I will return to the North without a return trip.

I'm flying this week

I hate flying.

You're telling me that I get to be harassed by power-tripping TSA workers, sit at a gate in anxious anticipation to see if my flight will be delayed, get hit with highway robbery for the most basic of goods, and be crammed into a metal cylinder for hours with sick and screaming children? No thanks!!

But alas, the only way to cross the country in a reasonable amount of time is through flying.

The first leg of my flight out was on a 737 MAX 8. The seat left my ass sore and my neck sorer after trying to get some sleep. At least the middle seat was empty, so I could stretch out a little more. Also, the landing was ROUGH, though that plane has made rougher landings... But having a 60-watt USB-C port was convenient for keeping the laptop charged.

I'm currently on the second leg, this time on a 737-800, an older plane. Man, this seat is so much more comfortable, and I actually have legroom!! However, I'm getting a bit of range anxiety about the 3-4 hour bus ride after this. Maybe the bus will have a charging port; fingers crossed it's a 120 V outlet. Still, I'd call it a worthy trade. This is the first flight I've been on where they had to direct people to spread out to balance the plane; we're about 60 below capacity, so another flight with an empty seat next to me! I should play the lottery with this lucky streak!

$5 says this landing is a hell of a lot smoother; no bots to get in the way.

Like pretty much everybody in 2016, I watched and loved the first season of Stranger Things

The follow-up seasons... not so much

Season 2 felt aimless, but was still charming at times.

Season 3 was fun, and developed Billy's character well.

Season 4 was... awful. Like, I almost didn't want to watch season 5. It was so bad that I powered through a Covid headache to write this on my Gemini site:

From the vaults: Stranger Things 4 was bad

Published: July 2022

Like many people in summer 2016, I watched the original Stranger Things on Netflix after hearing positive reviews. Sure enough, it was a captivating show with believable drama, decent horror, and an adorable cast.

However, I have been disappointed by the subsequent seasons. Season 2 didn't have the same impact as season 1. It lacked cohesion: Finn Wolfhard was in the popular It films and so was unavailable for filming much of the time. Instead, they focused on the adults and young adults, developing Hopper and Joyce and redeeming Steve's character.

Season 3 was better. I've seen others dislike this season, but it was undeniably fun and had captivating character arcs. However, they undermine the impact of Hopper's self-sacrifice by revealing he survived within 10 minutes of his on-screen death. Having the Russians as the enemy was a bit of campy fun; they didn't overplay it and, better yet, didn't make a political statement.

Season 4 was much more like season 2 than season 3.

Season 4

Starting with the things I liked: Eleven's dark side was interesting. After the opening scene, where it appears she has murdered the rest of her test-siblings, you have to wonder whether we can really trust Eleven. Really, most of Eleven's solo story and her internal battle with Papa was quite good. I also liked how they handled the Satanic Panic: people weren't overly bloodthirsty, and most doubted what the boy pushing it was saying. People didn't suddenly lose their mental capacity as soon as Satan was mentioned. I liked Hopper's escape and the way the audience was kept in the dark about his plan. Overall, the visuals were quite good; I had far fewer "This is CGI" moments than in previous seasons.

But that's about it...

First, a few nitpicks

We saw way too much of the villain. Initially he looked creepy, but after the fifth time I couldn't help but notice how goofy he looked, like Jim Carrey's Grinch, shaved and with a feeding tube installed in the side of his neck. Additionally, Dustin's banter was a little much sometimes. Finally, can we stop naming all the villains after D&D villains? It was cute when they were little kids, but I can't take it seriously when a bunch of almost-adults feel the need to name the murder demon.

Let's start with the big picture and work down from there. The overall story was far too disconnected. Half of the kids were in Hawkins, the other half were on a road trip for most of the season, Eleven was doing her own thing, and all the adults were in Russia. They only come together in the season finale. This had me constantly thinking, "What are they doing again? Why do I care about this group of kids?", which is the last thing you want your audience asking. The already bloated cast didn't stop them from adding even more characters!! There's a stoner who is friends with Jonathan, and Eddie, a metal-loving D&D player who becomes the lead suspect in the murders.

Who's that again?

We don't learn anything new about the established cast. I guess that's not quite true: Max gets some development, but the fact that I'd forgotten she even existed before I started watching this time around makes me reluctant to count her as part of the established cast. Will still just wants to be a kid and is still upset with Mike for having a girlfriend, just like last season. Lucas joins the school basketball team, but that is dropped after episode 3 when he rejoins the Hawkins kids. The first 3 episodes feel like they were killing time before the actual story starts.

They attempted to give this season a motivated villain (good), but failed to give him any motivation (bad). There was an underlying message about trauma being bad, but whatever they were trying to say was lost because the tentacle monster man was too distracting. He attacked people with past trauma and told them they were to blame for the event. The first girl had bulimia, the second guy had survivor's guilt from a car accident in which the other person died, and Max had lost her brother in the finale of the previous season. This was an interesting plot point, since most of the cast could be targets with their own fair share of traumatic experiences. However, it was pretty much dropped altogether once we discovered that the villain was actually One. And One's motivation? He was treated poorly by Papa, which made him evil and want to take over the world by merging it with the Upside Down. The same goal as all the other villains, except this time he can speak.

Emotional manipulation

Every few episodes they needed an emotionally wrenching moment, and each time it felt undeserved. They would get a close shot of the actor, the actor would give a half-cry about something, sad music would play, and I would be almost laughing at how poorly it was done. "WE NEED AN EMOTIONAL MOMENT ON SET, EMOTIONAL MOMENT IN 3 ... 2 ... ok look sad, say how much you love them, a little more tear, ok done". It never felt like the scene was earned; it felt like they needed to move the story forward with a quick heart-to-heart that then gets thrown away within minutes. The worst part is that this show knows how to do an emotional moment, because last season it handled them perfectly. There were only a handful of emotional scenes, and both of the ones I remember were earned: Hopper's death and Billy's death. Both deeply affected every character in the show in some way. Billy was Max's brother, and Hopper acted as a father figure to pretty much all the kids at one point. This season, however, emotional scenes were thrown in willy-nilly. Eddie and Steve get one where Eddie realizes that Steve is actually a good guy and sees why Dustin likes him. Now, mind you, what he said was sweet, but it puts the whole story on hold just so the show can remind us Steve is in fact a good guy, something that has been established since season 2.

Additionally, Eddie's death was idiotic. It's never explained well why they must kill the bats; I assume it weakens the main villain, because he showed pain when the other monsters were killed. Instead of running from the fight, Eddie decides to plunge head-first into certain death. Since he ran away in the first scene, this did not come off as brave or satisfying, but rather as naive self-flagellation resulting in a pointless death. This character had been on screen so little that I didn't really care he died, yet it's written as if we should.

Homophobia

I hate what they did with the character of Robin. Last season she was a fun addition and served as a good fish out of water compared to the original cast. Last season they also revealed she was a lesbian. Cool. It would have been cooler if Steve's initial reaction had been more negative and grown into understanding and acceptance, but Netflix needed an additional representation token and I'll let them take it. This season, however, they completely changed her character, making her a stereotypical band lesbian who somehow managed to lose whatever social skills she had between last season and now. I thought her sexuality was an interesting development for her character, and they could have done so much with it given the setting of small-town Indiana, but they threw her away and brought in a bland replacement to score bonus points.

Russia

The majority of the adult storyline this season does not work. Joyce decides to leave her entire family to go save Hopper in Russia on a day's notice. Mind you, Jonathan is older now and could look after them, I accept that, but after losing her son to spooky monsters multiple times over the past few years, you would think she'd be a tad more worried when mysterious murders start occurring. More than that, these scenes are tonally completely different. They feel much more like the campy, fun romp of season 3 than the darker season 4. This is most noticeable when they cut between the stories: right after seeing a child get their skull caved in and their arms snapped in two, we see a man fight a peanut-butter smuggler with karate on a plane flying into Siberia. It's to the show's detriment that while we have 2 serious stories, the main villain story and Hopper's experience in the camp, we have a very light-hearted, silly story tying them together. It's like hitting an emotional brick wall every time you switch between them. That said, I did enjoy the goofy parts. This show is best when it's having fun, and it had a lot more fun with Joyce and Murray than with any other characters.

Hopper's story was actually pretty good. He convincingly portrayed a man who had been through forced labor in a prison camp; this was a much wiser Hopper. Everything he did had purpose, and he had gotten over some of the pride he had in the first few seasons. Purposefully breaking his ankles to escape was hard to watch, but it communicated very well the lengths he was willing to go to in order to escape.

The End (I wish)

At the end of season 2 I wanted more. At the end of season 3 I wanted it to be over. At the end of season 4 I want both. It ends on a cliffhanger where the Upside Down is shown entering Hawkins. The ultimate climax was a letdown of a battle between One and Eleven in which they force-throw each other around a few times. You could tell immediately that, unfortunately, this would not be the last season of the show. Season 3 felt like it had wrapped up pretty much everything and the show should have ended there, but they wanted to finish Hopper's story, so they had to make season 4. Since nothing much happened in season 4, I feel like I want more from the show. I invested more than 12 hours into this last season; at least give me a cohesive ending that wraps up our journey to Hawkins with a nice little bow. Alas, we get neither something to look forward to nor a satisfying ending.

I hope they just make season 5 a really long episode that makes this whole last season worth it. Hell, I guess I've sunk too much time into this show to stop watching now.

Now back to 2025

Wow did I get exactly what I asked for!!

Now, of course, only the first 4 episodes are out so far, and they still have the rest of the season to crash and burn, but I have high hopes.

They managed to effectively transition from that garbage fire of the last season back into the show I remembered watching nearly a decade ago. They don't pretend the previous season didn't happen, but they shy away from its weaker elements.

Robin is an actual character again. She's still different from season 3, but it doesn't feel like she's just there as bait for Tumblr. I really like what they did with her serving as a gay confidant for Will. Will's sexuality has been hinted at since season 1, and the two of them sharing and bonding over their closeted lives feels earnest.

In general, it feels like Will is an actual character for once; in previous seasons the writers never gave him much to do except play a sort of damsel in distress whenever the monster of the season was nearby. Now he has agency.

The characters' stories are tied together even while the characters are split up. Breaking the characters into smaller groups is necessary with such a large cast, but you don't get whiplash every time the focus shifts to a different group. I feel like each character has had their time in the spotlight.

They have fun with serious situations. Escorting the children out through a tunnel dug in the bathroom of a military base was simultaneously absurd and grounded enough to feel like there were real stakes.

The story hit the ground running. The intro, with Robin summing up the current situation during her radio broadcast, cut right to the heart of the story. It didn't waste time re-establishing what the audience already knows.

They made ham radio look cool!!

The mid-season finale kept me on the edge of my seat the entire time.

Demogorgons are formidable foes again, and having ten spawn right in front of everybody in the Right Side Up was tense, especially with Lucas in the tunnels. The use of hand-held cameras as Mike and the crew dodged soldiers and monsters alike added to the anxiety of the moment. While I saw Will stopping the Demogorgons coming from a mile away, the twist that he has powers of his own was a genuine surprise.

Hopper's fake-out self-sacrifice made me roll my eyes a bit, since they've played that card a few too many times, but the reveal that it was Eight behind the door was a great surprise. It seemed like they wanted to retcon that whole episode out of season 2, which I don't blame them for, but tying it back in was satisfying, as it had been sitting in the back of my mind for the past two seasons.

Overall, I couldn't be happier with how they stuck the landing after the hit the show took with season 4.